AI for Lawyers: Complete Guide for Legal Professionals

Table of Contents

- The Ethical Framework: ABA Formal Opinion 512 Explained

- Technology Competence: What Lawyers Must Know Now

- Types of AI Tools Transforming Legal Practice

- Court Disclosure Requirements and Judicial Orders

- The Hallucination Problem: Lessons from Mata and Beyond

- Confidentiality Risks and Data Security Concerns

- Supervising AI Use: Rules 5.1 and 5.3 Obligations

- Candor to Tribunals: Rule 3.3 in the AI Age

- Fee Considerations and Rule 1.5 Implications

- Implementation Strategies for Legal AI Adoption

- Developing Complete AI Policies

- Success Stories: AI Implementation That Works

- Common Pitfalls and How to Avoid Them

- The Future of AI in Legal Practice

- Taking Action: Your AI Implementation Roadmap

Artificial intelligence is transforming the legal profession. In July 2024, the American Bar Association issued Formal Opinion 512, which offers ethical guidance on generative AI in legal practice and shapes how lawyers should approach technology competence. More than forty states have adopted the technology competence language of Comment [8] to Rule 1.1, so attorneys in those states cannot simply ignore AI tools. A Stanford database documents over 80 cases involving AI misuse, with courts imposing sanctions. Yet AI legal technology also offers opportunities for efficiency, cost reduction, and improved client service.

The Ethical Framework: ABA Formal Opinion 512 Explained

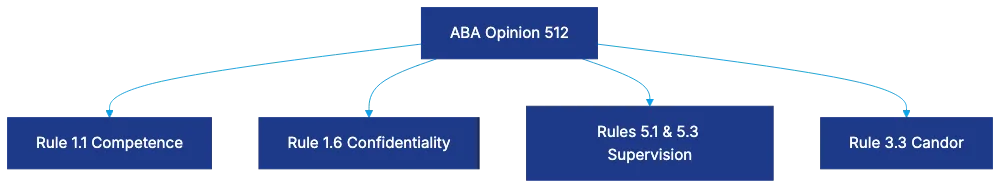

ABA Formal Opinion 512 marks a shift in the ethics of legal AI. Released in July 2024, it applies the Model Rules to generative AI, including Rule 1.1 (Competence), Rule 1.6 (Confidentiality), Rules 5.1 and 5.3 (Supervision), Rule 3.3 (Candor to the Tribunal), and Rule 1.5 (Fees). Lawyers need a reasonable understanding of the capabilities and limitations of the generative AI tools they use, including concepts such as large language models, training data, and hallucinations. Confidentiality is central: clients must give informed consent before information relating to their representation is entered into AI systems that may learn from it. Firms must create policies rather than rely on individual experimentation.

Technology Competence: What Lawyers Must Know Now

Comment [8] to Rule 1.1 requires technology competence. For AI, that means understanding how the tools are built, where their limits lie, and how they handle data. Relying on a tool trained on outdated data, for example, can produce work based on superseded law and expose the lawyer to malpractice claims. Understanding how vendors handle client data is equally important.

Technology competence comes down to asking the right questions and verifying outputs. Lawyers who cannot explain how their AI tools work take on risk every time they use them.

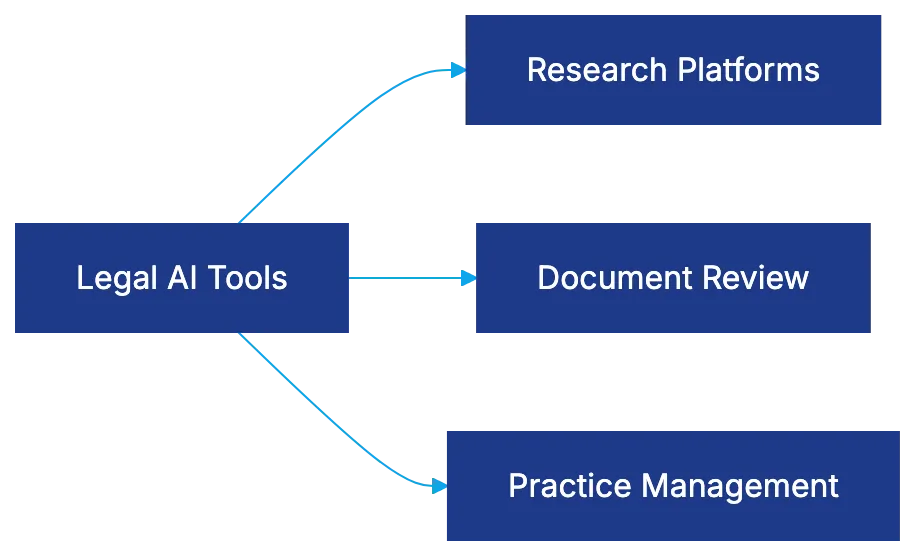

Types of AI Tools Transforming Legal Practice

AI tools for attorneys fall into several categories:

- Legal Research Platforms: Tools like Westlaw’s AI-Assisted Research and LexisNexis Lexis+ AI find relevant cases and statutes. Their results still require verification.

- Drafting Assistance Tools: Tools such as Harvey AI generate draft legal documents but do not replace legal judgment.

- Document Review AI: Assists with discovery in complex litigation, cutting costs by up to 70%.

- Practice Management AI: Manages tasks like scheduling and intake, and poses lower ethical risks.

- Predictive Analytics AI: Estimates case values and predicts outcomes, raising the question of whether statistical predictions amount to legal advice.

Court Disclosure Requirements and Judicial Orders

After Mata v. Avianca, more than 140 judges issued standing orders on attorneys’ use of AI. The RAILS AI Orders Tracker at rails.legal monitors these orders. Requirements vary: some orders require disclosure whenever AI tools are used, while others mandate identification of the specific tool, which creates a compliance challenge for lawyers who appear before more than one court. Consequences for non-compliance range from sanctions to case dismissal.

The Hallucination Problem: Lessons from Mata and Beyond

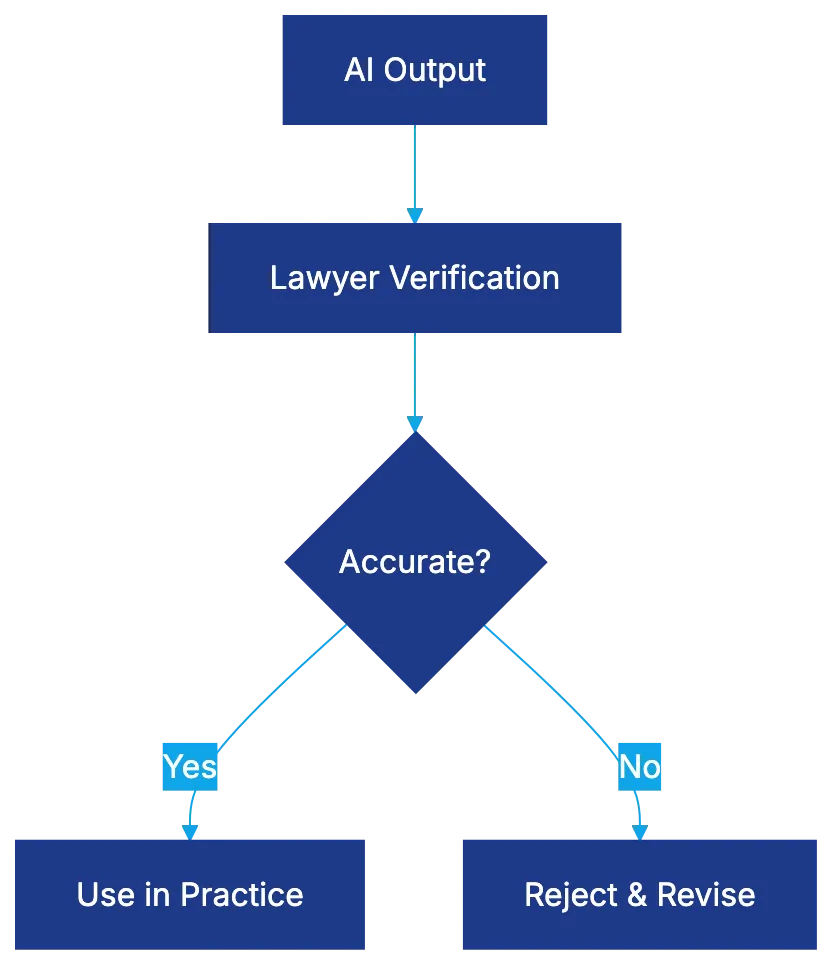

Mata v. Avianca brought AI hallucinations to the profession’s attention. Steven Schwartz, an attorney in the case, used ChatGPT for research and submitted citations to non-existent cases to the court, resulting in sanctions. The problem stems from how large language models work: they predict likely next words from patterns in their training data rather than retrieving documents from a verified source. ABA Opinion 512 expects lawyers to understand this limitation.
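
The pattern-versus-retrieval distinction can be illustrated with a deliberately tiny model. The sketch below is not how ChatGPT or any production system is built; it is a toy bigram model, written for this guide, that only records which word has followed which. The two "citations" it learns from are hypothetical placeholders, not real cases. Even so, it treats recombined fragments as valid outputs, which is the basic mechanism behind a fabricated citation.

```python
from collections import defaultdict

# Hypothetical placeholder citations; neither refers to a real case.
TRAINING_TEXT = [
    "Alpha v. Beta, 100 F.3d 200",
    "Gamma v. Delta, 300 F.3d 400",
]

START, END = "<s>", "</s>"


def build_bigrams(lines):
    """Record, for each token, the set of tokens seen immediately after it."""
    follows = defaultdict(set)
    for line in lines:
        tokens = [START] + line.split() + [END]
        for prev, nxt in zip(tokens, tokens[1:]):
            follows[prev].add(nxt)
    return follows


def all_outputs(follows, max_tokens=10):
    """Enumerate every token sequence the toy model regards as possible."""
    results = set()
    stack = [(START, [])]
    while stack:
        token, words = stack.pop()
        for nxt in follows[token]:
            if nxt == END:
                results.add(" ".join(words))
            elif len(words) < max_tokens:  # guard against runaway sequences
                stack.append((nxt, words + [nxt]))
    return results


follows = build_bigrams(TRAINING_TEXT)
possible = all_outputs(follows)
invented = possible - set(TRAINING_TEXT)

print(len(possible), "possible outputs;", len(invented), "never appeared in the training text")
for citation in sorted(invented):
    print("  ", citation)
```

Every invented line has the shape of a citation because each adjacent pair of tokens was seen in training, yet the model has no step at which it checks whether the whole citation exists. Real language models are vastly more capable, but they share this absence of a built-in lookup unless a retrieval system is added around them.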

Confidentiality Risks and Data Security Concerns

Rule 1.6 mandates protecting client confidences, and AI tools create new vulnerabilities. Lawyers must ensure vendors do not use client data for training, and should verify vendor claims through due diligence rather than take them on trust. Ask about server locations, international data transfers, and encryption standards.

ABA guidance on informed consent is key: clients should understand the risks of AI use in their matters. A risk-based AI policy can scale its restrictions to the confidentiality level of each task.
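
One way to picture a risk-based policy is as a small lookup that a firm's intake or IT tooling could apply. The sketch below is an assumption-laden illustration, not a rule drawn from Opinion 512: the three sensitivity tiers, the `ToolProfile` fields, and the `may_use` function are all hypothetical names, and a real policy would be set by the firm's own ethics analysis.

```python
from dataclasses import dataclass
from enum import IntEnum


class Sensitivity(IntEnum):
    """Hypothetical tiers; a firm would define its own."""
    PUBLIC = 1               # e.g. published opinions and statutes
    INTERNAL = 2             # firm know-how with no client identifiers
    CLIENT_CONFIDENTIAL = 3  # information relating to a representation


@dataclass(frozen=True)
class ToolProfile:
    name: str
    trains_on_inputs: bool        # answer obtained through vendor due diligence
    max_sensitivity: Sensitivity  # ceiling set by firm policy (assumed)


def may_use(tool: ToolProfile, data: Sensitivity, client_consented: bool) -> bool:
    """Return True if this illustrative policy permits sending the data to the tool."""
    if data > tool.max_sensitivity:
        return False
    if data is Sensitivity.CLIENT_CONFIDENTIAL:
        # Assumed rule: confidential data needs informed consent and a
        # vendor that does not train on what it receives.
        return client_consented and not tool.trains_on_inputs
    return True


public_chatbot = ToolProfile("public chatbot", True, Sensitivity.PUBLIC)
firm_tool = ToolProfile("firm-licensed tool", False, Sensitivity.CLIENT_CONFIDENTIAL)

print(may_use(public_chatbot, Sensitivity.PUBLIC, client_consented=False))
print(may_use(firm_tool, Sensitivity.CLIENT_CONFIDENTIAL, client_consented=False))
print(may_use(firm_tool, Sensitivity.CLIENT_CONFIDENTIAL, client_consented=True))
```

Encoding the policy this way keeps the two questions separate: what the vendor does with data (a due diligence finding) and what the client has agreed to (a consent record). Either can change without rewriting the other.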

Supervising AI Use: Rules 5.1 and 5.3 Obligations

ABA Opinion 512 treats AI as nonlawyer assistance under Rule 5.3. Supervising lawyers must ensure that everyone who uses AI tools receives proper training, and firms need policies governing AI use. Inadequate supervision can itself be an ethical violation.

Candor to Tribunals: Rule 3.3 in the AI Age

Rule 3.3 requires candor to tribunals, and AI-generated hallucinations submitted to a court can violate it. Verifying AI outputs is an affirmative duty for lawyers. Courts have rejected good-faith reliance on AI as an excuse, stressing that professional responsibility stays with the lawyer.
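
Part of that verification duty can be supported by a simple pre-filing check. The sketch below assumes a lawyer keeps a list of citations that a person has actually pulled and read; it then flags any reporter citation in a draft that is not on that list. The regular expression is a simplified assumption covering only a few federal reporter formats, the function names are hypothetical, and the sample citations are placeholders rather than real cases.

```python
import re

# Simplified pattern: volume, one of a few federal reporters, first page.
# Real citation formats are far more varied than this.
CITATION_RE = re.compile(
    r"\b\d{1,3}\s+(?:U\.S\.|F\.\s?Supp\.(?:\s?[23]d)?|F\.(?:2d|3d|4th))\s+\d{1,4}\b"
)


def extract_citations(text):
    """Return the set of reporter citations found in the text, whitespace-normalized."""
    return {re.sub(r"\s+", " ", m.group(0)) for m in CITATION_RE.finditer(text)}


def unverified(draft, verified):
    """List citations in the draft that no one has marked as checked."""
    return sorted(extract_citations(draft) - set(verified))


# Hypothetical draft text and verification log.
draft = (
    "Plaintiff relies on Alpha v. Beta, 100 F.3d 200, "
    "and on Gamma v. Delta, 300 F. Supp. 2d 400."
)
checked_by_a_lawyer = {"100 F.3d 200"}

for citation in unverified(draft, checked_by_a_lawyer):
    print("Not yet verified:", citation)
```

A check like this only catches citations nobody has looked at. It cannot confirm that a case exists or that it stands for the proposition cited, so it supplements, and never replaces, a lawyer reading the authority.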

Fee Considerations and Rule 1.5 Implications

Rule 1.5 requires reasonable fees. AI’s efficiency gains raise questions about billing practices, particularly when work billed by the hour now takes less time. Transparency about AI use matters in fee negotiations with clients. Firms also incur costs when they invest in legal AI technology and need a way to recover them.

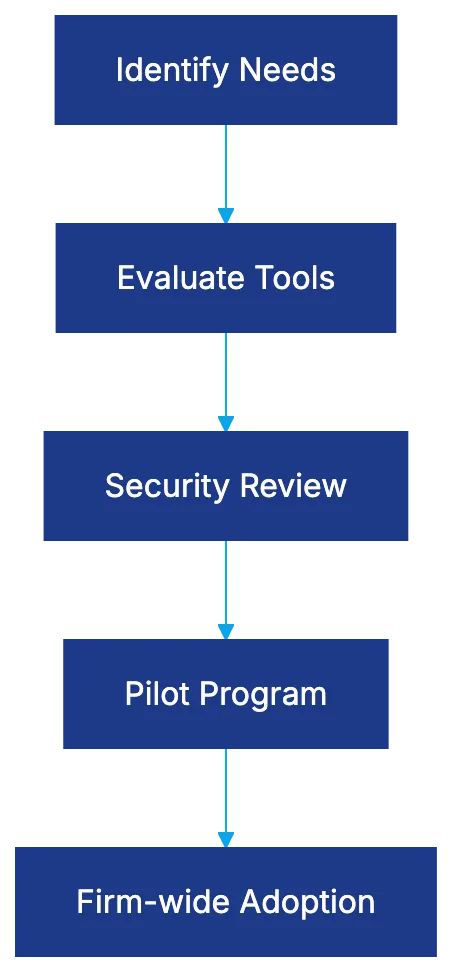

Implementation Strategies for Legal AI Adoption

Effective AI adoption requires a strategy: identify pain points, evaluate tools, insist on trials, review security, and integrate tools with existing systems. Run pilot programs with clear metrics before wider firm adoption.

Developing Complete AI Policies

ABA Opinion 512 calls for firm policies on AI use. Policies should cover permissible use, consent requirements, verification, data security, training, and updates.

Success Stories: AI Implementation That Works

Examples of successful legal AI technology integration:

- Mid-sized Litigation Firm: AI-powered document review saved significantly on costs and time in a class action.

- Boutique Intellectual Property Firm: AI contract analysis improved draft speed and quality.

- Solo Practitioner: AI tools enabled greater client capacity and reduced costs.

Common Pitfalls and How to Avoid Them

Pitfalls include AI overreliance, inadequate vendor diligence, and lack of output verification. Lawyers must ensure all outputs are verified by someone with legal expertise.

The Future of AI in Legal Practice

AI technology will become more specialized and sophisticated. Expect standardized court disclosure requirements, increased regulatory oversight, and evolving client expectations.

Taking Action: Your AI Implementation Roadmap

Begin by reading ABA Opinion 512 and understanding Rule 1.1 obligations. Audit current AI use, develop or update policies, and evaluate AI tools for strategic implementation. Successful AI adoption involves careful, continuous effort.

Frequently Asked Questions

What is ABA Opinion 512 and why is it significant?

ABA Opinion 512 provides ethical guidance regarding the use of artificial intelligence in legal practice, particularly emphasizing the need for technology competence. It introduces specific responsibilities for lawyers related to generative AI and outlines how existing Model Rules apply, marking a critical development in legal ethics as technology integration in law continues to grow.

How can lawyers ensure they are complying with Rule 1.1 on technology competence?

Lawyers need to actively understand the capabilities and limitations of AI tools they employ. This involves keeping up with ongoing education, investigating how AI tools handle data, and ensuring they can appropriately verify the outputs generated by these systems.

What are the potential risks associated with using AI tools in legal practice?

There are several risks, including data security vulnerabilities, the potential for AI-generated inaccuracies (known as "hallucinations"), and challenges in maintaining client confidentiality. Lawyers must ensure proper vendor due diligence and establish risk-based policies to protect client information effectively.

What steps should a law firm take to implement AI tools effectively?

A law firm should start by identifying specific needs that AI can address, evaluate appropriate tools, and conduct a security review. Implementing a pilot program to test AI tools and measuring outcomes before firm-wide adoption is crucial for successful integration.

How do courts view the use of AI in legal practice?

Courts are increasingly requiring transparency regarding AI usage through specific disclosure orders. Non-compliance can lead to sanctions or case dismissals, which emphasizes the importance of adhering to judicial requirements while using AI tools.

What should lawyers be aware of concerning client confidentiality when using AI?

Lawyers must ensure that client data is not used without informed consent and must verify that third-party vendors comply with confidentiality requirements. It is crucial to ask about data storage, handling practices, and any potential data sharing involved in the use of AI tools.

What common pitfalls should lawyers avoid when adopting AI technology?

Common pitfalls include over-reliance on AI without proper verification, inadequate vendor diligence, and failure to establish comprehensive policies for AI usage. Lawyers should ensure that outputs are verified by qualified personnel and avoid using AI in a way that could compromise ethical duties or client relationships.

Related Articles

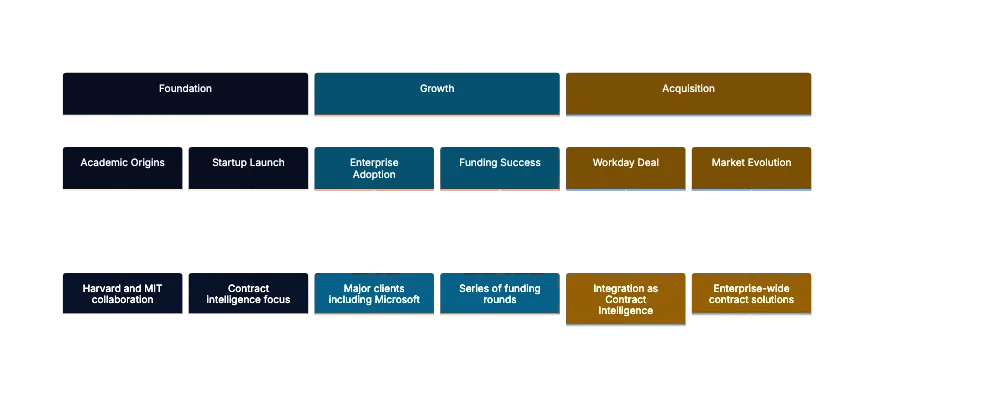

Evisort: Revolutionizing Contract Intelligence

Discover how Evisort's innovative contract solutions transform legal operations and integrate with enterprise systems post-acquisition.

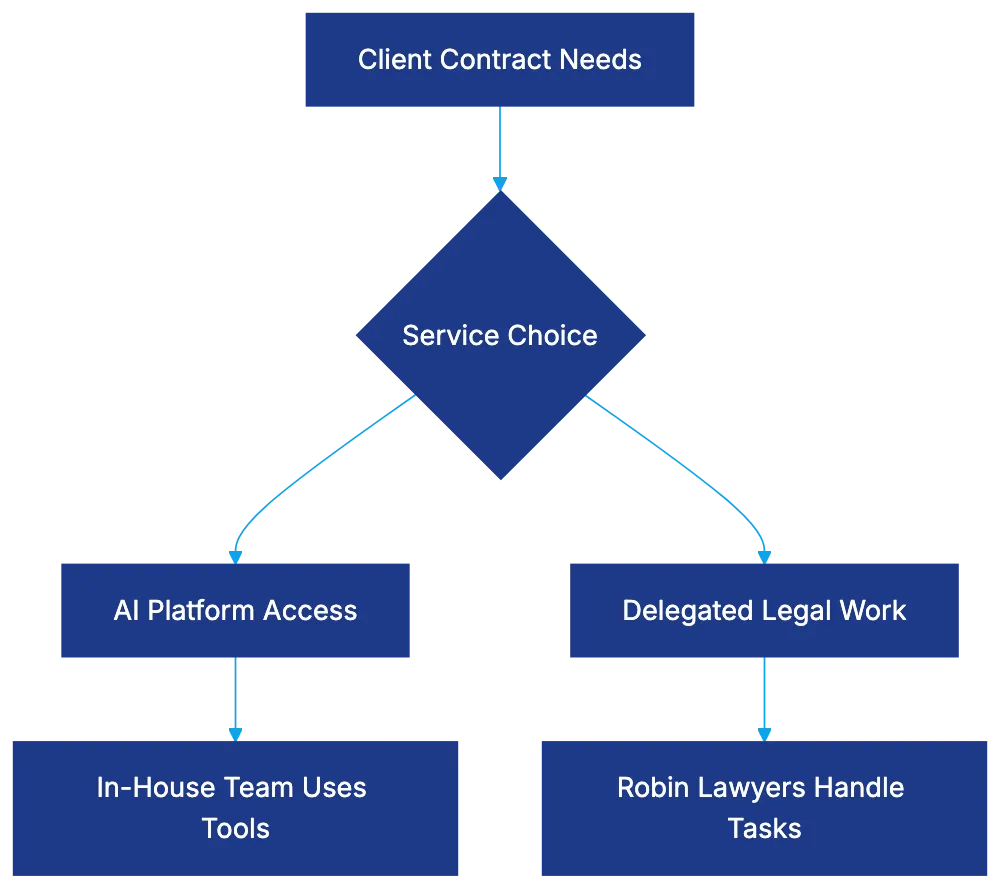

Robin AI: Transforming Legal Contract Review

Explore how Robin AI combines contract automation with legal expertise, redefining legal services in the UK market.

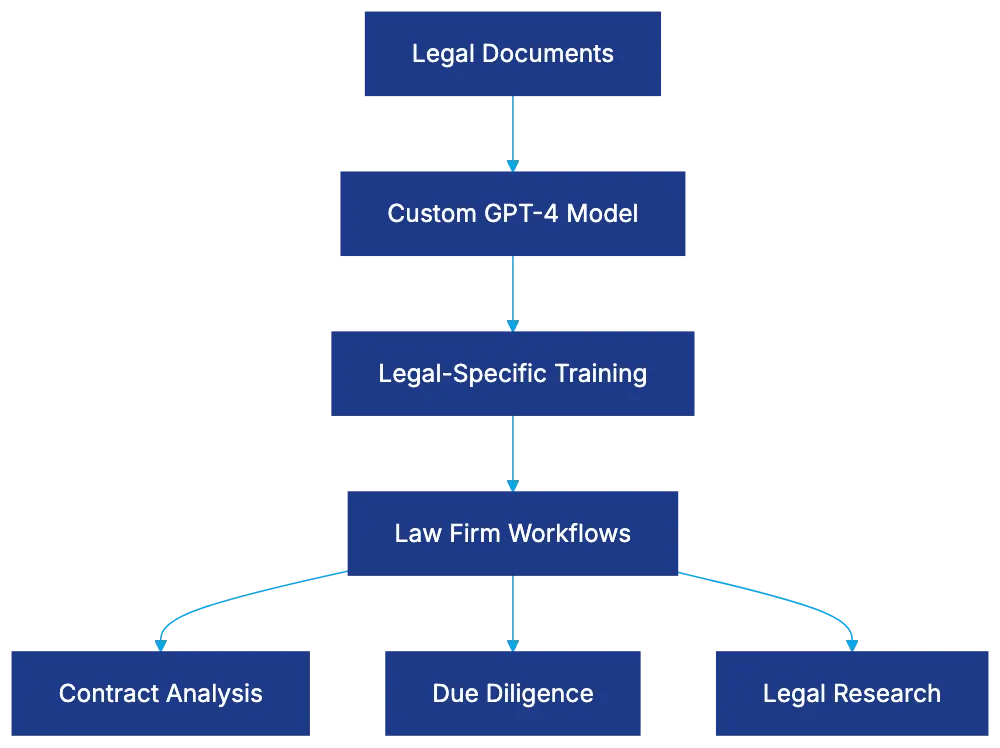

Harvey AI: Leading the Legal AI Investment Revolution

Discover how Harvey AI is transforming legal processes with advanced AI and substantial funding in the legal sector.